What Is Data Extraction?

Data Extraction

When data is extracted through a distributed query, it is transported from the source system to the data warehouse through a single Oracle Net connection. For larger data volumes, file-based data extraction and transportation techniques are often more scalable and thus more appropriate. With a distributed query, a data warehouse or staging database can directly access tables and data located in a connected source system. Gateways allow an Oracle database (such as a data warehouse) to access database tables stored in remote, non-Oracle databases.
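
The idea of a distributed query, where the warehouse reads source tables directly over a connection, can be illustrated on a small scale with Python's built-in sqlite3 module. This is only an analogy, not Oracle Net or a gateway: SQLite's `ATTACH DATABASE` lets one connection query tables stored in a second database file, so a single `CREATE TABLE ... AS SELECT` both extracts and lightly transforms the data. The table and column names (`orders`, `stage_orders`, `customer`, `amount`) are hypothetical.

```python
import os
import sqlite3
import tempfile


def extract_via_attached_source(warehouse: sqlite3.Connection, source_path: str) -> int:
    """Pull rows from a source database file into a warehouse staging table.

    ATTACH stands in for a database link or gateway (an analogy only):
    after it, the warehouse connection can query the source's tables directly.
    """
    warehouse.execute("ATTACH DATABASE ? AS src", (source_path,))
    try:
        # Extraction plus a light transformation in one statement, in the
        # spirit of CREATE TABLE ... AS SELECT over a distributed query.
        warehouse.execute(
            "CREATE TABLE stage_orders AS "
            "SELECT order_id, UPPER(customer) AS customer, amount FROM src.orders"
        )
        warehouse.commit()
    finally:
        warehouse.execute("DETACH DATABASE src")
    return warehouse.execute("SELECT COUNT(*) FROM stage_orders").fetchone()[0]


# Usage: build a throwaway "source system" file (hypothetical data), then extract from it.
with tempfile.TemporaryDirectory() as tmp:
    source_path = os.path.join(tmp, "source.db")
    source = sqlite3.connect(source_path)
    source.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
    source.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, "acme", 10.0), (2, "globex", 25.5)],
    )
    source.commit()
    source.close()

    warehouse = sqlite3.connect(":memory:")
    copied = extract_via_attached_source(warehouse, source_path)
    rows = warehouse.execute("SELECT * FROM stage_orders ORDER BY order_id").fetchall()
    print(copied, rows)  # 2 [(1, 'ACME', 10.0), (2, 'GLOBEX', 25.5)]
```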

Data Sources

If, as part of the extraction process, you need to remove sensitive data, Alooma can do this. Alooma encrypts data in motion and at rest, and is 100% SOC 2 Type II, ISO 27001, HIPAA, and GDPR compliant. Usually, you extract data in order to move it to another system or for data analysis (or both).
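
Masking sensitive fields during extraction can be sketched in a few lines of Python. This is not how Alooma or any particular product does it; it is a minimal illustration, assuming records arrive as dictionaries and that the hypothetical fields `email` and `ssn` are the sensitive ones. Each value is replaced by a truncated salted SHA-256 digest, so equal inputs still map to equal outputs (useful for joins) while the raw value never leaves the extraction step.

```python
import hashlib
from typing import Iterable, Iterator

# Hypothetical names of the fields treated as sensitive.
SENSITIVE_FIELDS = ("email", "ssn")


def mask_record(record: dict, salt: str = "demo-salt") -> dict:
    """Return a copy of record with sensitive fields replaced by salted hashes."""
    masked = dict(record)
    for field in SENSITIVE_FIELDS:
        if field in masked:
            raw = (salt + str(masked[field])).encode("utf-8")
            # Truncated SHA-256 digest: stable for equal inputs, unreadable otherwise.
            masked[field] = hashlib.sha256(raw).hexdigest()[:16]
    return masked


def extract_masked(records: Iterable[dict]) -> Iterator[dict]:
    # Mask each record as it streams out of the source, before it is loaded.
    for record in records:
        yield mask_record(record)


source_rows = [
    {"id": 1, "name": "Ada", "email": "ada@example.com"},
    {"id": 2, "name": "Lin", "email": "lin@example.com", "ssn": "123-45-6789"},
]
masked_rows = list(extract_masked(source_rows))
print(masked_rows[0]["name"], len(masked_rows[0]["email"]))  # Ada 16
```

In practice the salt would be kept secret rather than hard-coded, and some fields are better dropped entirely than hashed.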

Depending on the chosen logical extraction method and the capabilities and restrictions on the source side, the extracted data can be physically extracted by two mechanisms. The data can be extracted either online from the source system or from an offline structure.
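
The difference between the two physical mechanisms can be sketched with `sqlite3` and `csv` from the Python standard library. The online path queries the live source directly; the offline path first unloads the data to a flat file (a `StringIO` buffer stands in for a file on disk) and then reads only that file. The `sales` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3
from typing import List, TextIO, Tuple

Row = Tuple[int, str, int]
QUERY = "SELECT id, product, qty FROM sales ORDER BY id"  # hypothetical source table


def extract_online(conn: sqlite3.Connection) -> List[Row]:
    # Online: read straight from the live source system.
    return conn.execute(QUERY).fetchall()


def unload_to_flat_file(conn: sqlite3.Connection, out: TextIO) -> None:
    # Offline, step 1: an extraction routine dumps the data to a flat file.
    writer = csv.writer(out)
    writer.writerow(["id", "product", "qty"])
    writer.writerows(conn.execute(QUERY))


def extract_offline(flat_file: TextIO) -> List[Row]:
    # Offline, step 2: later processing reads the file, not the source system.
    return [(int(r["id"]), r["product"], int(r["qty"])) for r in csv.DictReader(flat_file)]


source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (id INTEGER, product TEXT, qty INTEGER)")
source.executemany("INSERT INTO sales VALUES (?, ?, ?)", [(1, "widget", 3), (2, "gadget", 5)])

online_rows = extract_online(source)

staged = io.StringIO(newline="")  # stands in for a file on disk
unload_to_flat_file(source, staged)
staged.seek(0)
offline_rows = extract_offline(staged)
print(online_rows == offline_rows)  # True
```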

Tools

And for businesses with franchises or multiple locations, you can use data scraping tools to keep track of how team members are engaging and interacting on social media. Equity researchers, investors, and small businesses alike need to study the financial markets to help inform their investments and see how their assets are performing. Instead of analyzing individual statements and records, and performing market research on different assets, use data extraction to handle these tasks without slowing down your productivity. This process saves you time and resources while giving you the valuable data you'll need to plan ahead. Depending on the tools you use, it can organize your data into a highly usable and valuable resource so you can improve everything in your business, from productivity to market research. There are many web scraping tools to choose from, which can lead to confusion and indecision about which is best for your organization when you need to extract data. Web scraping basically involves using tools to scrape through online sources to collect the information you need.

Data Extraction Defined

It lets you retrieve relevant data and search for patterns to integrate into your workflow. In this blog, we have explored the data extraction process using R programming and the different steps involved in it. In the first step, we discussed the process of cleaning data in R using different techniques that transform a dirty dataset into a clean or tidy one, making it easy to work with. After data cleaning, in the next step, we performed various operations for data manipulation in R, including data manipulation with the dplyr package. The same scraper you just created might not work two days later, and you have to keep fixing bugs whenever needed. On the other hand, the cost of outsourcing is burdensome for individuals and small businesses. Web data extraction, also called web scraping or web harvesting, is used to extract large amounts of data from websites to local computers or databases. In data extraction, the initial step is data pre-processing or data cleaning.
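
As a minimal illustration of web data extraction, the sketch below uses Python's built-in `html.parser` to pull product names and prices out of an HTML snippet. It assumes hypothetical markup in which each product sits in an element with `class="product"` containing `name` and `price` elements; fetching the page is omitted so the example runs offline. A real site needs its own selectors, and the parser has to be updated whenever the site's markup changes.

```python
from html.parser import HTMLParser


class ProductParser(HTMLParser):
    """Collect name/price text from hypothetical class="product" blocks."""

    def __init__(self) -> None:
        super().__init__()
        self.items = []            # one dict per product element
        self.current_field = None  # "name" or "price" while inside that element

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if cls == "product":
            self.items.append({})
        elif cls in ("name", "price") and self.items:
            self.current_field = cls

    def handle_endtag(self, tag):
        self.current_field = None

    def handle_data(self, data):
        text = data.strip()
        if self.current_field and text:
            self.items[-1][self.current_field] = text


# Hypothetical page markup.
PAGE = """
<ul>
  <li class="product"><span class="name">Desk lamp</span> <span class="price">$19.99</span></li>
  <li class="product"><span class="name">Office chair</span> <span class="price">$89.00</span></li>
</ul>
"""

parser = ProductParser()
parser.feed(PAGE)
products = [
    {"name": item["name"], "price": float(item["price"].lstrip("$"))}
    for item in parser.items
]
print(products)
# [{'name': 'Desk lamp', 'price': 19.99}, {'name': 'Office chair', 'price': 89.0}]
```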

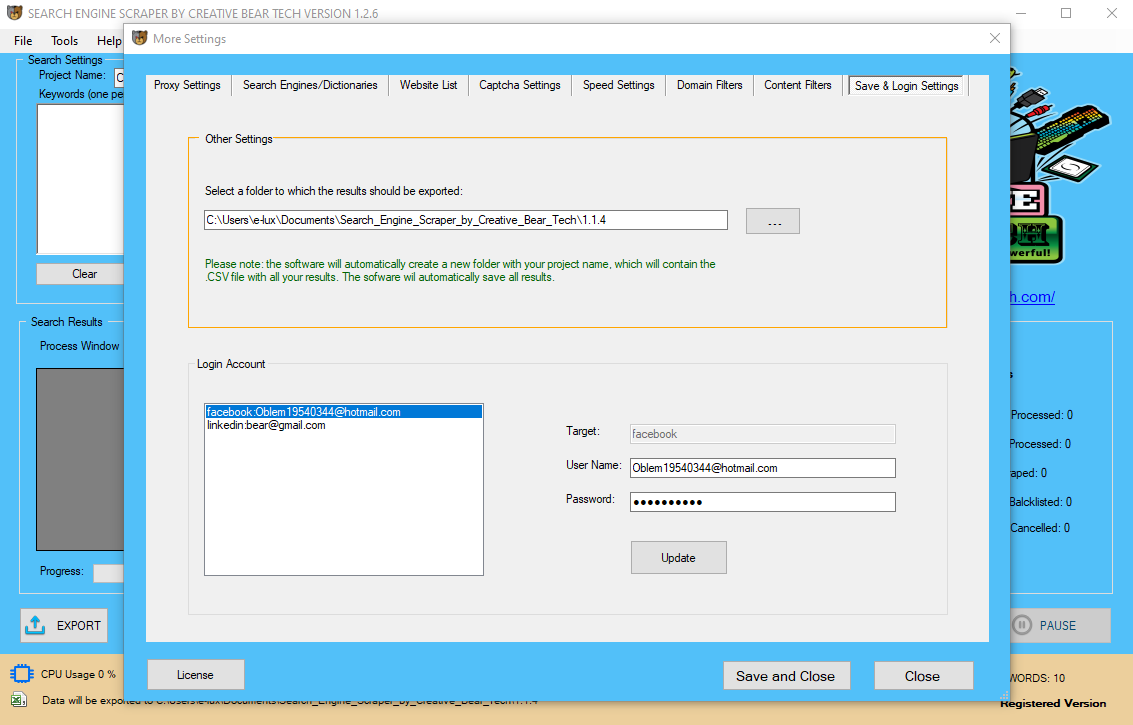

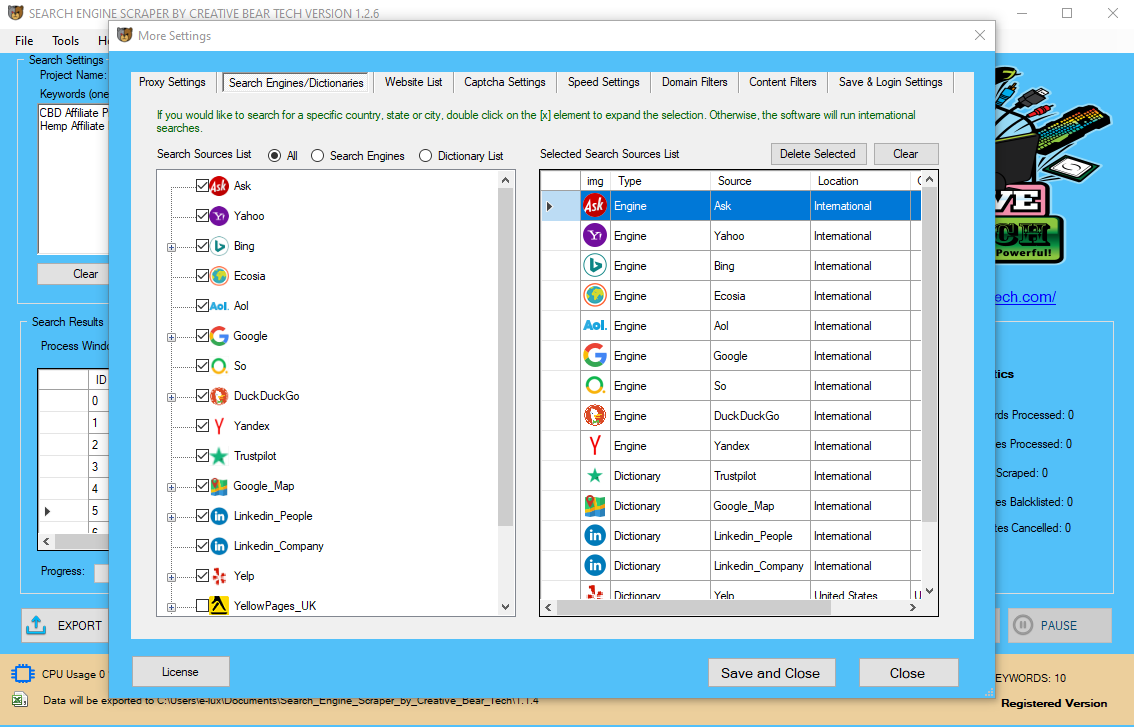

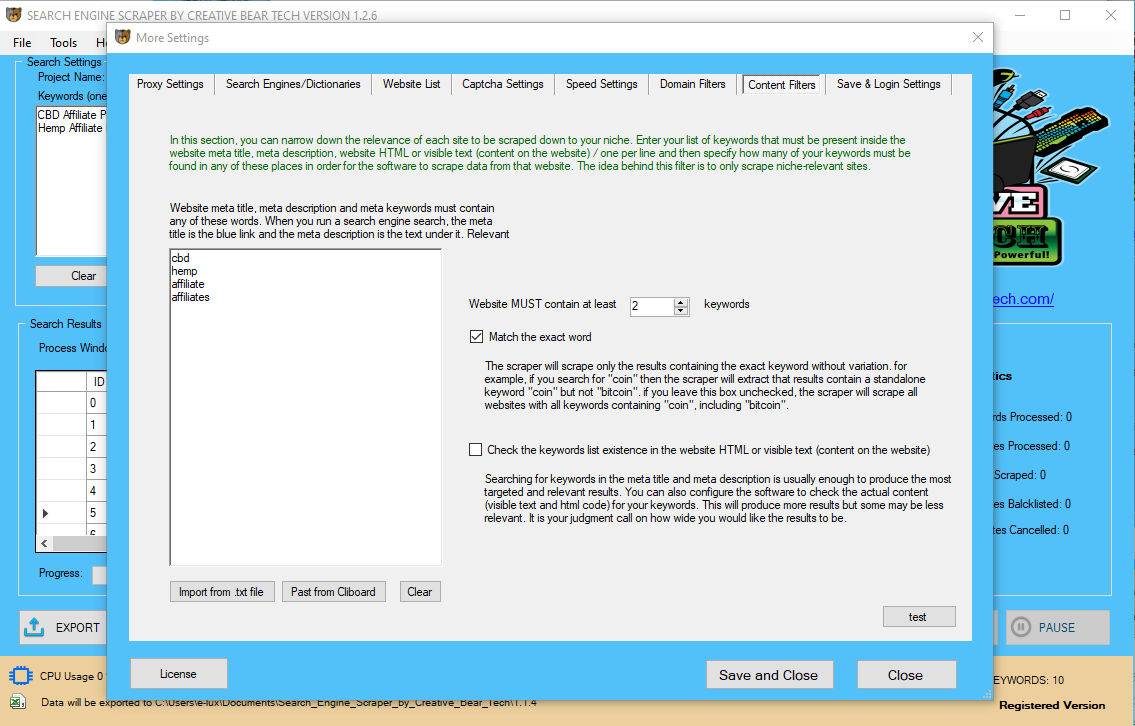

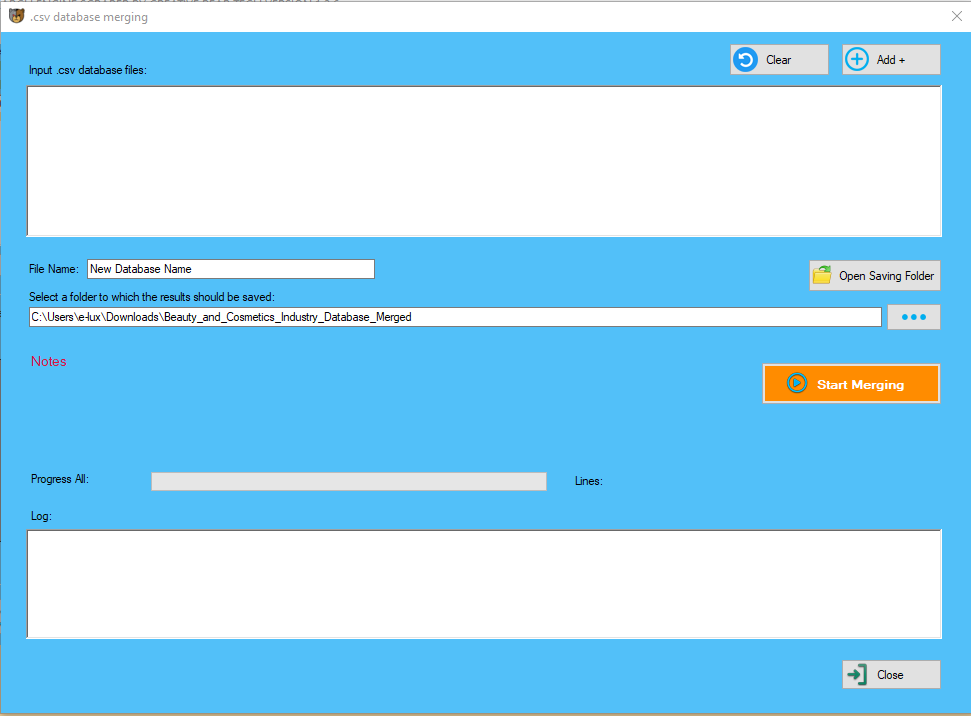

It is mainly used for web scraping of disparate data, email ID extraction, phone number extraction, image extraction, document extraction, and so on. This is a very simple and easy-to-use web scraping tool available in the industry. ICR converts images of handprinted text to an editable and/or searchable file format. There are all kinds of tools for extracting unstructured data from files that can't be reused, such as PDFs or websites run by governments and organizations. Some are free, others are fee-based, and in some cases languages like Python are used to do this. This is a desktop application for Mac OS X, Windows, and Linux that helps companies and individuals convert PDF files into an Excel or CSV file that can be easily edited. This is one of the most powerful web scraping tools: it can capture all of the open data from any website and save the user the effort of copy-pasting the data or writing any additional code. This is mainly used to extract IP addresses, disparate data, email addresses, phone numbers, web data, and so on. Improve your productivity by using a data extraction tool to perform these activities and keep your business running smoothly. Data extraction automates the process so that you immediately get the latest information on your competitors without having to hunt it down. This tool is a no-brainer for large-scale extraction from many web sources. It not only has all of the features of an average scraper but also surpasses most tools in its comprehensiveness. When it comes to web data extraction, you might quickly jump to the conclusion that this is another privilege for the techie. In many cases this is the most challenging aspect of ETL, as extracting data correctly sets the stage for how subsequent processes will go. 
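
Pulling email addresses, phone numbers, and IP addresses out of unstructured text is, at its core, pattern matching. The sketch below uses Python's `re` module with deliberately simple patterns (North American style phone numbers and dotted IPv4 addresses); it is an illustration under those assumptions, not the method any of the tools above use, and real-world formats vary far more than these patterns cover.

```python
import re

# Deliberately simple patterns (assumptions): they miss many valid formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\(?\d{3}\)?[-.\s]?\d{3}[-.\s]\d{4}")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_contacts(text: str) -> dict:
    """Return every email, phone number, and plausible IPv4 address in text."""
    return {
        "emails": EMAIL_RE.findall(text),
        "phones": PHONE_RE.findall(text),
        # Drop matches like 999.1.1.1 whose octets exceed 255.
        "ips": [ip for ip in IPV4_RE.findall(text)
                if all(int(part) <= 255 for part in ip.split("."))],
    }


sample = (
    "Contact sales@example.com or call (555) 123-4567. "
    "Support: help.desk@example.org, 555.987.6543. Server at 192.168.0.12."
)
found = extract_contacts(sample)
print(found)
```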
On page 6 of the Data Extraction wizard, you'll see only two checkboxes. The first checkbox, “Insert data extraction table into drawing”, lets you create an AutoCAD table right inside the drawing. Next, page 7 of the Data Extraction wizard opens, where you can change the settings of the table and give your data extraction table a name. For our example, I will name this table “Sample Data extraction”, then click the Next button, and finally click the Finish button on the last page of the Data Extraction wizard.

Small businesses often find it hard to compete with industry giants even though they have ingenious ideas. The reason is simple: these tech giants hold far more data than all other companies combined. Data extraction gives you more peace of mind and control over your business without having to hire extra hands to handle your data needs. And perhaps best of all, this foundation of data extraction can scale and expand with your business as you grow. When you are done making changes to this table, click the Next button and you'll reach page 6 of the Data Extraction wizard. Now you'll see page 5 of the Data Extraction wizard, which shows you the final table. We can modify this table the way we want, and here too we can make some customizations. You can make the properties list even shorter by unchecking the properties that you don't need in the data extraction table from the Properties panel on page 4 of the Data Extraction wizard. To avoid overwhelming amounts of data in our data extraction table, I will uncheck some categories from the category filter, and the properties from these categories will be hidden from the Properties panel. Web data extraction looks great on paper but faces many challenges in practice. Besides the considerable programming knowledge required to build a scraper, you might want to consider the time and effort you put into maintenance. You can also add multiple drawings to extract data for the data extraction table. To add multiple drawings, click the “Add Drawings” button, select the drawings from which you want to extract data, and click the Open button. In this case, I will uncheck all of the blocks that start with the A$C prefix, as they are automatically created blocks that we don't want to use in our data extraction table. 
The window will go through a loading process and open a new window called Data Extraction – Select Objects (Page 3 of 8), as shown in the picture below. As we will be extracting data from a single drawing, we will not use option C on page 2 of the Data Extraction wizard. This really puts into perspective how speeding up a few tasks with data extraction can be hugely useful for small businesses. There are many benefits to using data extraction to speed up and automate workflows, especially for small businesses. Such an offline structure might already exist, or it might be generated by an extraction routine. How the extracted data is provided for further processing influences the transportation method and the need for cleaning and transforming the data, while the choice of extraction method influences the source system, the transportation process, and the time needed for refreshing the warehouse.

- When combined with our business process outsourcing, the result is high impact with minimal disruption.

- What makes DOMA different is that we offer more than a single targeted tool.

- We integrate multiple types of data extraction tools to create holistic solutions that can tackle bigger challenges within your business.

- Data extraction is a key component of a fully realized data management strategy.

If you plan to analyze the data, you are likely performing ETL so that you can pull data from multiple sources and run analysis on it together. The challenge is ensuring that you can join the data from one source with the data from other sources so that they play well together. In data cleaning, the task is to transform the dataset into a basic form that makes it easy to work with. One characteristic of a clean/tidy dataset is that it has one observation per row and one variable per column.
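
Reshaping a "wide" table, where each year is its own column, into tidy form with one observation per row can be sketched in plain Python. The store and sales figures are made up for illustration; pandas offers the same operation as `pandas.melt`, and in R the tidyr function `pivot_longer` does it.

```python
def wide_to_long(rows, id_field, var_name, value_name):
    """Turn one-column-per-variable rows into one-observation-per-row records."""
    tidy = []
    for row in rows:
        for key, value in row.items():
            if key == id_field:
                continue  # the identifier is repeated on every output row instead
            tidy.append({id_field: row[id_field], var_name: key, value_name: value})
    return tidy


# Wide layout: the years are spread across columns (made-up figures).
messy = [
    {"store": "north", "2022": 120, "2023": 135},
    {"store": "south", "2022": 98, "2023": 110},
]
tidy = wide_to_long(messy, id_field="store", var_name="year", value_name="sales")
for record in tidy:
    print(record)
# {'store': 'north', 'year': '2022', 'sales': 120}
# {'store': 'north', 'year': '2023', 'sales': 135}
# {'store': 'south', 'year': '2022', 'sales': 98}
# {'store': 'south', 'year': '2023', 'sales': 110}
```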

After extraction, this data can be transformed and loaded into the data warehouse. I hope you find this tutorial useful; if you have any questions related to this tool, feel free to let me know in the comment section below and I will try my best to answer. Whether you run an IT firm, a real estate business, or any other type of business that handles data and documents, here are a few examples of data extraction being used in business. AWS Textract is a service that automatically extracts text and data from scanned documents. It uses machine learning to instantly “read” virtually any type of document to accurately extract text and data without the need for any manual effort or custom code. Data extraction is the process of lifting unstructured data out of your documents so that you can effectively integrate, analyze, and apply it. Import.io is a free online tool, but there is also a fee-based version for companies. It supports structured extraction of data and downloading in CSV format or generating an API from the data. The extraction method you should choose is highly dependent on the source system and also on the business needs in the target data warehouse environment. Very often, there is no possibility to add additional logic to the source systems to enhance an incremental extraction of data, because of the performance impact or the increased workload on these systems. Sometimes the customer is not even allowed to add anything to an out-of-the-box application system. Extraction is the operation of extracting data from a source system for further use in a data warehouse environment.
The next page, i.e. page 2 of the Data Extraction wizard, has a panel at the top called “Data source”, which involves choosing whether we want to extract data from the entire drawing or only a particular part. For this example, let's say we're interested in creating, for whatever reason, a table that lists all the properties along with the count of blocks used in our drawing. The workflow for doing this using data extraction is explained below. Extraction through a distributed query is the simplest method for moving data between two Oracle databases because it combines the extraction and transformation into a single step and requires minimal programming. Some source systems might use Oracle range partitioning, such that the source tables are partitioned along a date key, which allows for easy identification of new data. For example, if you are extracting from an orders table, and the orders table is partitioned by week, then it is easy to identify the current week's data. Each of these techniques can work in conjunction with the data extraction techniques discussed previously. For example, timestamps can be used whether the data is being unloaded to a file or accessed through a distributed query. In data manipulation, the task is to modify the data to make it easier to read and more organized. Data manipulation is also associated with the term ‘data exploration’, which involves organizing data using the available sets of variables. Among the other steps of a review project, it facilitates data extraction and data synthesis.
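
Timestamp-based incremental extraction can be sketched with `sqlite3`: each run selects only the rows modified since the previous run's watermark and returns a new watermark for the next run. The `orders` table and its `last_modified` column are hypothetical, and the sketch assumes timestamps are stored as ISO-8601 strings in one consistent format so that string comparison matches chronological order.

```python
import sqlite3


def extract_changed_rows(conn: sqlite3.Connection, watermark: str):
    """Return rows modified after `watermark` plus the watermark for the next run.

    Assumes a hypothetical orders.last_modified column holding ISO-8601 strings.
    """
    rows = conn.execute(
        "SELECT order_id, status, last_modified FROM orders "
        "WHERE last_modified > ? ORDER BY last_modified",
        (watermark,),
    ).fetchall()
    # Advance to the newest timestamp seen; keep the old one if nothing changed.
    next_watermark = rows[-1][2] if rows else watermark
    return rows, next_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, status TEXT, last_modified TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, "shipped", "2024-03-01T09:00:00"),
        (2, "open", "2024-03-04T14:30:00"),
        (3, "open", "2024-03-05T08:15:00"),
    ],
)

changed, watermark = extract_changed_rows(conn, "2024-03-02T00:00:00")
print([row[0] for row in changed], watermark)  # [2, 3] 2024-03-05T08:15:00

# A second run with the new watermark finds nothing new.
again, _ = extract_changed_rows(conn, watermark)
print(again)  # []
```

One caveat: a row committed late with a timestamp at or before the stored watermark would be missed, so pipelines built this way often re-read a small overlap window.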

With the default options selected, simply click Next on page 2 of the wizard. Here you can choose to extract data from blocks and Xrefs, and to include Xrefs as blocks in the data extraction table. You can also choose to extract data from only the model space of the entire drawing using the options in the “Extract from” panel. You can make additional settings for the data extraction table by clicking the Settings button, as shown in option D of the figure above. I am a digital marketing leader for global inbound marketing at Octoparse, a graduate of the University of Washington, with years of experience in the big data industry. I like sharing my thoughts and ideas about data extraction, processing, and visualization. Today I want to share with you how you can leverage web data extraction to deal with some painful problems that you encounter in your business. Extracting high-quality and relevant data helps create a more reliable lead generation system, which reduces your marketing spend. When you know the leads you're collecting are right for your business, you can spend less time and money trying to entice them to buy your products and services. Traditional ICR engines vary in accuracy and server requirements, and new cloud-based, machine-learning-trained ICR engines are hitting the market on a fairly regular basis. OMR captures data from form elements such as checkboxes and multiple-choice bubbles. Form variations and versions, poor scan quality, fax conversions, and more can all affect the structure of data that exists on a form. Data extraction goes some way toward giving you peace of mind (and more control) over your business, without having to hire more staff to handle all of your data. 
Once you start exploring the possibilities of data extraction, you're sure to find a use for it within your own business. If you intend to analyze the data, you are likely performing ETL (extract, transform, load) so that you can pull data from multiple sources and run analysis on it together. With structured data, the extraction process is typically performed within the source system. Excel is the most basic tool for managing the screening and data extraction phases of the systematic review process. Customized workbooks and spreadsheets can be designed for the review process. A more advanced approach to using Excel for this purpose is the PIECES approach, designed by a librarian at Texas A&M. The PIECES workbook can be downloaded from this guide. Because data in a warehouse may come from different sources, a data warehouse requires three different processes to make use of the incoming data. These processes are known as Extraction, Transformation, and Loading (ETL). ScraperWiki is the ideal tool for extracting data arranged in tables in a PDF. If the PDF has multiple pages and numerous tables, ScraperWiki provides a preview of all the pages and the various tables, and the ability to download the data in an orderly way and separately. Table Capture is an extension for the Chrome browser that lets a user capture the data on a website with little difficulty. It extracts the data contained in an HTML table on a website to a data processing format such as Google Sheets, Excel, or CSV. Octoparse is a no-brainer for large-scale extraction from many web sources. Another option, billed as the best Chrome extension for data extraction, helps you build a sitemap to determine how a website should be traversed and which elements should be extracted. 
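
What an HTML-table extractor such as Table Capture does can be approximated with the Python standard library: collect the text of every cell row by row, then write the rows out as CSV. This is a rough sketch, not that extension's implementation; it assumes well-formed tables with explicit closing `</td>`, `</th>`, and `</tr>` tags and ignores `colspan` and `rowspan`.

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def html_table_to_csv(html: str) -> str:
    parser = TableParser()
    parser.feed(html)
    parser.close()
    out = io.StringIO()
    # csv quotes any cell containing a comma, e.g. "Chair, ergonomic".
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


PAGE = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Desk lamp</td><td>19.99</td></tr>
  <tr><td>Chair, ergonomic</td><td>89.00</td></tr>
</table>
"""
print(html_table_to_csv(PAGE))
```
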
Covidence is a software platform built specifically for managing every step of a systematic review project, including data extraction. Read more about how Covidence can help you customize extraction tables and export your extracted data. The dplyr package contains various functions that are specifically designed for data extraction and data manipulation. These functions are preferred over the base R functions because they process data at a faster rate and are generally considered the best for data extraction, exploration, and transformation. The source systems for a data warehouse are typically transaction processing applications. For example, one of the source systems for a sales analysis data warehouse might be an order entry system that records all of the current order activities. The first part of an ETL process involves extracting the data from the source systems. This is one of the best-known visual extraction tools on the market, and anyone can use it to extract data from the web. The tool is mainly used to extract images, email IDs, documents, web data, contact information, phone numbers, pricing details, etc. This is another popular tool used by businesses, which mainly acts as a visual web scraping tool, web data extractor, and macro recorder. Data is typically analyzed and then crawled through in order to get any relevant information from the sources (such as a database or document). By contrast, a flight ticket will be very cheap if the destination is not popular. You can use web data extraction to track prices during promotional events, like Black Friday. This lets you keep up with the changes and adjust your pricing strategy in a timely manner. 
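
Tracking prices around a promotion such as Black Friday comes down to comparing two scraped snapshots. The sketch below assumes each snapshot is a dictionary mapping hypothetical product IDs to prices and reports the products whose price moved by more than a chosen percentage; how the snapshots are scraped is left out.

```python
def price_changes(previous: dict, current: dict, threshold_pct: float = 0.0):
    """List products whose price moved by more than threshold_pct between snapshots."""
    changes = []
    # Only products present in both snapshots can be compared.
    for product_id in sorted(previous.keys() & current.keys()):
        old, new = previous[product_id], current[product_id]
        if old == 0:
            continue  # avoid dividing by zero on free or placeholder items
        pct = (new - old) / old * 100
        if abs(pct) > threshold_pct:
            changes.append((product_id, old, new, round(pct, 1)))
    return changes


# Hypothetical snapshots keyed by product ID.
before = {"sku-1": 100.0, "sku-2": 40.0, "sku-3": 15.0}
black_friday = {"sku-1": 75.0, "sku-2": 40.0, "sku-3": 12.0, "sku-4": 9.99}
moved = price_changes(before, black_friday, threshold_pct=1.0)
print(moved)  # [('sku-1', 100.0, 75.0, -25.0), ('sku-3', 15.0, 12.0, -20.0)]
```
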
Usually, the term data extraction is applied when (experimental) data is first imported into a computer from primary sources, like measuring or recording devices. Today's electronic devices will usually present an electrical connector (e.g. USB) through which 'raw data' can be streamed into a personal computer. Data extraction can be a powerful tool in data governance for both federal and commercial entities. Our team is equipped to do the heavy lifting at every stage, which means you can fully outsource your data extraction without needing to build an in-house solution. When these factors come into play, templatized data extraction simply doesn't cut it. Scheduled data extraction, whether monthly, weekly, or daily, can help you run ad hoc reports for your business and stay up to date. Use the data in your favor: perform e-commerce market research, collect social media data, aggregate content, and generate leads. Though technically this was an academic study, it is a fantastic example of how pairing up disparate sources of extracted data can lead to interesting insights. View their short introductions to data extraction and analysis for more information. SRDR (Systematic Review Data Repository) is a web-based tool for the extraction and management of data for systematic review or meta-analysis. It is also an open and searchable archive of systematic reviews and their data. Access the "Create an Extraction Form" section for more information. Alooma is a cloud-based ETL platform that specializes in securely extracting, transforming, and loading your data.
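
The three ETL stages described in this article can be tied together in a toy Python pipeline: extract raw records from a CSV export, transform them (trim and normalise names, drop rows missing an amount), and load the result into a SQLite table. The data, column names, and cleaning rules are invented for illustration; a managed ETL platform also handles concerns such as scheduling and error recovery that this sketch leaves out.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: stray spaces and a missing amount on order 2.
RAW_CSV = """order_id,customer,amount
1, Acme ,10.50
2,globex,
3,Initech,7.25
"""


def extract(text):
    # Extract: read raw records from a source export.
    return list(csv.DictReader(io.StringIO(text)))


def transform(records):
    # Transform: trim and normalise names, drop rows with a missing amount.
    clean = []
    for r in records:
        if not r["amount"].strip():
            continue
        clean.append((int(r["order_id"]), r["customer"].strip().title(), float(r["amount"])))
    return clean


def load(rows, conn):
    # Load: write the cleaned rows into the target table.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)


warehouse = sqlite3.connect(":memory:")
loaded = load(transform(extract(RAW_CSV)), warehouse)
rows = warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall()
print(loaded, rows)  # 2 [(1, 'Acme', 10.5), (3, 'Initech', 7.25)]
```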
